The “Data Mesh” concepts have been discussed in many places recently, so I decided to read the reference book, “Data Mesh: Delivering Data-Driven Value at Scale”, by Zhamak Dehghani.

“This book creates a foundation of the objectives of data mesh, why we should bother, its first principles. We look at how to apply the first principles to create a high-level architecture and leave you with tools to execute its implementation and shift the organization and culture”, as the author wrote.

First off, what is Data Mesh?

Data Mesh is an analytical data architecture and operating model where data is treated as a product and owned by teams that most intimately know and consume the data.

Note: The book focuses on analytical data (as input to AI/ML), and it distinguishes between operational data and analytical data, the latter being derived from the former.

Why read this book? As I am trying to realize the vision of Autonomous Networks, the “data” topic is of interest to me. Obviously, we need a lot of data to detect SLA violations and network anomalies, to find the symptoms & root cause, and to enable closed-loop automation … with the help of AI/ML.

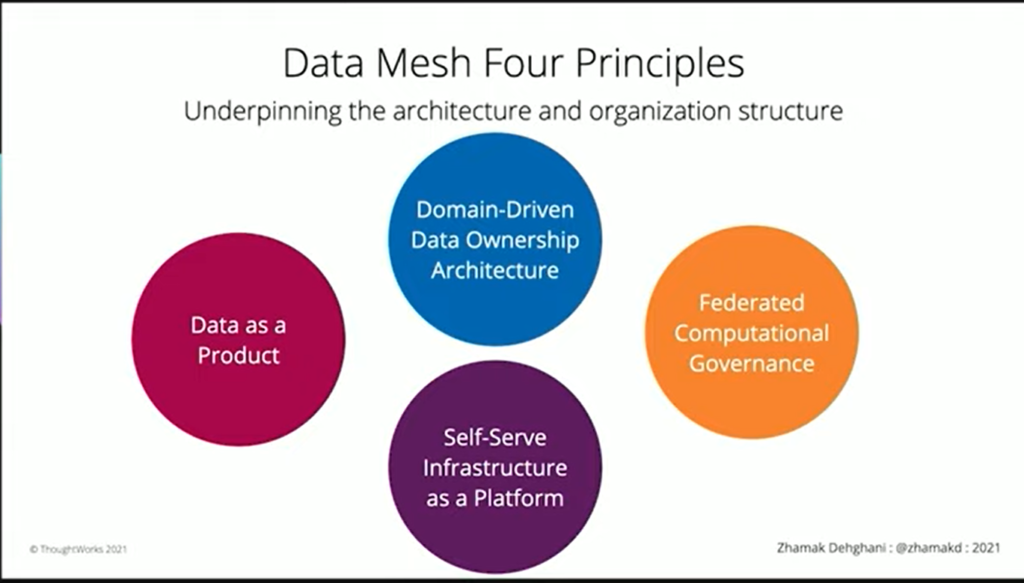

The picture above represents the four Data Mesh principles. This screenshot comes from the Zhamak Dehghani | Kafka Summit Europe 2021 Keynote, a half-hour video (in case you don’t have the time to read the entire book … or as a teaser to read the book).

- Principle of Domain Ownership: Architecturally and organizationally align business, technology, and analytical data, following the line of responsibility. Here, the Data Mesh principles adopt the boundary of bounded context to individual data products where each domain is responsible for (and owns) its data and models.

- Principle of Data as a Product: The “Domain” owners are responsible for providing the data in a useful way (discoverable through a catalog, addressable with a permanent and unique address, understandable with well-defined semantics, trustworthy and truthful, self-describing for easy consumption, interoperable by supporting standards, secure, self-contained, etc.) and should treat consumers of that data as customers. This is the most important principle in my opinion, the one the (networking) industry has been missing so much. Obviously, it requires and relies on the “Domain Ownership” principle.

- Principle of Self-serve Data Platform: This fosters the sharing of cross-domain data in order to create extra value.

- Principle of Federated Computational Governance: Describes the operating model and approach to establishing global policies across a mesh of data products.
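To make the “Data as a Product” attributes a bit more concrete, here is a minimal Python sketch of a descriptor that a domain could publish to a catalog. Everything in it (the `DataProduct` class, the field names, the `mesh://` address scheme, and the example values) is a hypothetical illustration, not something defined in the book.

```python
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    """Hypothetical descriptor a domain team publishes alongside its data."""
    name: str
    owner_domain: str                 # Domain Ownership: who is accountable
    address: str = ""                 # addressable: permanent, unique address
    description: str = ""             # understandable: semantics in plain words
    schema: dict = field(default_factory=dict)  # self-describing: field -> type/unit
    formats: tuple = ()               # interoperable: supported standard formats
    access_policy: str = ""           # secure: who may read the data

    def missing_attributes(self) -> list:
        """Return the usability attributes that are still empty."""
        checks = {
            "addressable": self.address,
            "understandable": self.description,
            "self-describing": self.schema,
            "interoperable": self.formats,
            "secure": self.access_policy,
        }
        return [attr for attr, value in checks.items() if not value]


# Example values are made up for illustration.
interface_stats = DataProduct(
    name="interface-utilization",
    owner_domain="core-network",
    address="mesh://core-network/interface-utilization",
    description="Per-interface utilization, 5-minute averages",
    schema={"interface": "string", "utilization": "percent"},
    formats=("json",),
)

print(interface_stats.missing_attributes())  # ['secure']
```

A check like `missing_attributes()` is the kind of rule that could be automated by the platform, so that an incomplete data product is flagged before consumers ever see it.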

While this book focuses primarily on typical end-user applications, services, and microservices (there is, in the prologue, a nice example of a Spotify-like company transforming itself into a data mesh architecture), those principles resonate in my networking world, where we have been facing similar challenges. Just to name a few…

- Domain Ownership. Networks are composed of different domains: campus, metro, core, cloud. Have you noticed how we always blame the network when the end-to-end Quality of Experience (QoE) is impacted? Well, as we know, the “network” is not always the guilty one. Also, there are, potentially as a consequence of the previous point, different roles: network engineer, applications engineer, data center engineer, cloud engineer, etc., each having its own set of responsibilities, and working in a more or less coordinated manner. Thinking further … there are different use cases, which could also be considered as domains: verification and troubleshooting, anomaly detection, capacity planning & trend detection, closed-loop automation, etc. If we speak purely about networking, we have different views as well: the control plane (where the traffic is supposed to go), the data plane (where the traffic is actually going), and the management plane. All of these have their own bounded context.

- Data as a Product. What if each domain & engineer & use case & view (you-name-it) could prove, with its own data set, that it is not responsible for the “service” degradation? Sending data is easy (here are all my IPFIX flows, my interface counters, or even my BMP data). Sending aggregated data as proof is actually more difficult, but it is the responsibility of each domain … and most importantly of the network domain (Remember: it’s always the network’s fault!). Now, let’s assume the specific domain is actually responsible for the fault/degradation: following this “Data as a Product” principle will help to quickly identify & resolve the problem.

- Data as a Product. Which model(s) to use, knowing that data models in the core network are not the same as the models for a cloud application, and rightly so? How to aggregate? What about GDPR? Do we need anonymization for IP addresses, ports, applications? etc.

- Data as a Product. On the AI/ML data scientist front, we know the challenges: data, more data, at high frequency, with semantic information, and ideally “labeled” data flagged with some anomalies. Before the data scientists receive the data on a silver platter, there are networking-specific challenges:

– how can we stream enough telemetry data to have a full view of the network?

– how can we stream, along with the data, the semantic information (e.g., could we keep the YANG data types all the way up to the analytics tools)?

– how can we stream, along with the data, some more metadata: the platform and data collection contexts (called “manifests” in A Data Manifest for Contextualized Telemetry Data), the routers’ capabilities at design and implementation time, etc.?

– shall we standardize on some network Key Performance Indicators (KPIs)? Can we agree on an SLA definition based on (the composition of) those KPIs?

– how can we provide the data scientists with the relationships between objects, to help with their analysis?

– etc.

- Principle of Self-serve Data Platform: Which north-bound API and data models shall we use? How to reconcile entities in different domains?

Note: on the last point, I learned a new term that will make me shine in society: “polysemes are shared concepts across different domains”.
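As a rough illustration of some of the questions in the list above (sending aggregated data as proof, anonymizing IP addresses, and defining an SLA as a composition of KPIs), here is a small Python sketch. The `Sample` fields, the KPI names, the thresholds, and the /24 truncation used for anonymization are all assumptions chosen for the example, not a proposed standard.

```python
import ipaddress
from dataclasses import dataclass
from statistics import mean


@dataclass
class Sample:
    """One raw telemetry measurement (hypothetical shape)."""
    source_ip: str
    latency_ms: float
    loss_pct: float


def anonymize(ip: str, prefix_len: int = 24) -> str:
    """Coarsen an IPv4 address by keeping only its first prefix_len bits."""
    network = ipaddress.ip_network(f"{ip}/{prefix_len}", strict=False)
    return str(network)


def aggregate(samples):
    """Reduce raw samples to a few KPIs a domain can share as proof."""
    return {
        "avg_latency_ms": mean(s.latency_ms for s in samples),
        "max_loss_pct": max(s.loss_pct for s in samples),
        "sources": sorted({anonymize(s.source_ip) for s in samples}),
    }


# Assumed SLA: every listed KPI must stay at or below its threshold.
SLA = {"avg_latency_ms": 20.0, "max_loss_pct": 0.5}


def sla_violations(kpis, sla=SLA):
    """Return the names of the KPIs that exceed their SLA threshold."""
    return [name for name, limit in sla.items() if kpis[name] > limit]


# Documentation-range addresses and made-up measurements.
samples = [
    Sample("192.0.2.10", 12.0, 0.0),
    Sample("192.0.2.77", 18.0, 0.1),
    Sample("198.51.100.5", 15.0, 0.0),
]
kpis = aggregate(samples)
print(kpis)
print(sla_violations(kpis))  # an empty list means this domain met its SLA
```

The point of the sketch is the shape of the exchange: the domain keeps its raw samples and shares only the aggregated, anonymized KPIs, which is what a consumer needs in order to decide whether that domain is responsible for a degradation.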

We already knew that data was key, and we now understand that the networking industry must evolve towards a Data Mesh architecture to realize the Autonomous Networks vision (and I have not mentioned Digital Map and Digital Twin yet). This requires our networking industry to dedicate time & effort to a few missing building blocks, in standards, research, and open source. Stay tuned for some more news here.