Back from an intensive IETF week (actually two days of hackathon over the weekend, followed by the IETF week itself), it’s time to reflect!

There were perhaps fewer participants on site, but with energy levels not seen for some years, we had a long series of back-to-back meetings. What makes me pretty happy is the renewed importance of running code, at least in my Operations and Management (OPS) area of standardization. And I have great hopes in the renewed focus on applying our IETF motto, “Rough consensus and running code”: running code with which we validate the specifications, learn, and update those specifications as a consequence.

As an example, let’s look at the Network Management Operations (NMOP) Working Group, which I described in this blog post. Citing the WG charter, the current topics of focus are:

- NETCONF/YANG Push integration with Apache Kafka & time series databases

- Anomaly detection and incident management

- Issues related to deployment/usage of YANG topology modules (e.g., to model a Digital Map)

- Consider/plan an approach for updating RFC 3535-bis (collecting updated operator requirements for IETF network management solutions)

This NMOP WG is perhaps special, as its charter explicitly mentions the notion of “experiments”:

“Discuss ideas for future short-term experiments (i.e., those focused on incremental improvements to network management operations that can be achieved in 1-2 years) and report on the progress of the relevant experimentation being done in the community.”

Excluding the last chartered topic (“Consider/plan an approach for updating RFC 3535-bis”, which will, by the way, turn into an IAB workshop on the Next Era of Network Management Operations … more on this later), all topics are experiments: “experiments” in the sense that they require running code and, at the same time, protocol and architecture specifications that evolve as we learn. Those topics/experiments were all part of the IETF hackathon. Let’s review them one by one.

NETCONF/YANG Push Integration with Apache Kafka & Time Series Databases

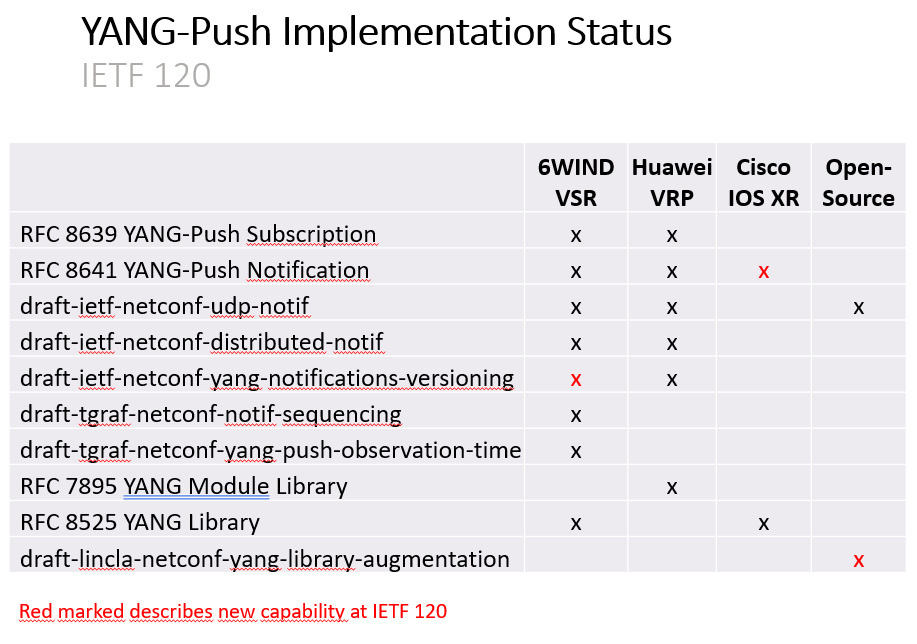

On that front, a multi-vendor team led by Thomas Graf tested the different specification drafts that compose the future YANG-Push solution, as THE basis for the data collection that in turn enables data analytics.

For an excellent detailed report on the different drafts, developments, and testing, see Thomas Graf’s summary here.
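To make the data collection side a bit more concrete, here is a minimal Python sketch of one step a collector might perform before writing into a time series database: flattening a YANG-Push `push-update` notification (RFC 8641) into individual data points. This is not code from the hackathon. The message envelope is an assumption, loosely modeled on the RESTCONF JSON notification encoding (RFC 8040), the sample counter values are made up, and treating a `name` leaf as the list key is a simplification; a real collector would take list keys and leaf types from the YANG schema. The Kafka consumer that would deliver the message is left out.

```python
import json
from typing import Any, Iterator

# Hypothetical sample message: a RESTCONF-style JSON notification (RFC 8040)
# carrying an RFC 8641 push-update with made-up ietf-interfaces counters.
SAMPLE = """
{
  "ietf-restconf:notification": {
    "eventTime": "2024-03-18T10:00:00Z",
    "ietf-yang-push:push-update": {
      "id": 1011,
      "datastore-contents": {
        "ietf-interfaces:interfaces": {
          "interface": [
            {"name": "eth0", "statistics": {"in-octets": "1200", "out-octets": "3400"}},
            {"name": "eth1", "statistics": {"in-octets": "50", "out-octets": "70"}}
          ]
        }
      }
    }
  }
}
"""

Point = tuple[str, dict[str, str], Any]


def flatten(node: Any, path: str, tags: dict[str, str]) -> Iterator[Point]:
    """Yield (path, tags, value) for every leaf below node."""
    if isinstance(node, dict):
        for key, child in node.items():
            yield from flatten(child, f"{path}/{key}", tags)
    elif isinstance(node, list):
        list_name = path.rsplit("/", 1)[-1]
        for entry in node:
            # Simplification: treat a "name" leaf as the list key (true for
            # ietf-interfaces); real code would read keys from the schema.
            entry_tags = dict(tags)
            if isinstance(entry, dict) and "name" in entry:
                entry_tags[list_name] = str(entry["name"])
                entry = {k: v for k, v in entry.items() if k != "name"}
            yield from flatten(entry, path, entry_tags)
    else:
        # Leaf value; counter64 values arrive as JSON strings (RFC 7951).
        yield path, tags, node


def to_points(message: str) -> list[dict[str, Any]]:
    """Turn one push-update message into time series data points."""
    notification = json.loads(message)["ietf-restconf:notification"]
    update = notification["ietf-yang-push:push-update"]
    base_tags = {"subscription-id": str(update["id"])}
    return [
        {"time": notification["eventTime"], "metric": path, "tags": tags, "value": value}
        for path, tags, value in flatten(update["datastore-contents"], "", base_tags)
    ]


for point in to_points(SAMPLE):
    print(point)
```

Each leaf becomes one point, tagged with the subscription id and the list key, which is roughly the shape most time series databases expect.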

Anomaly Detection and Incident Management

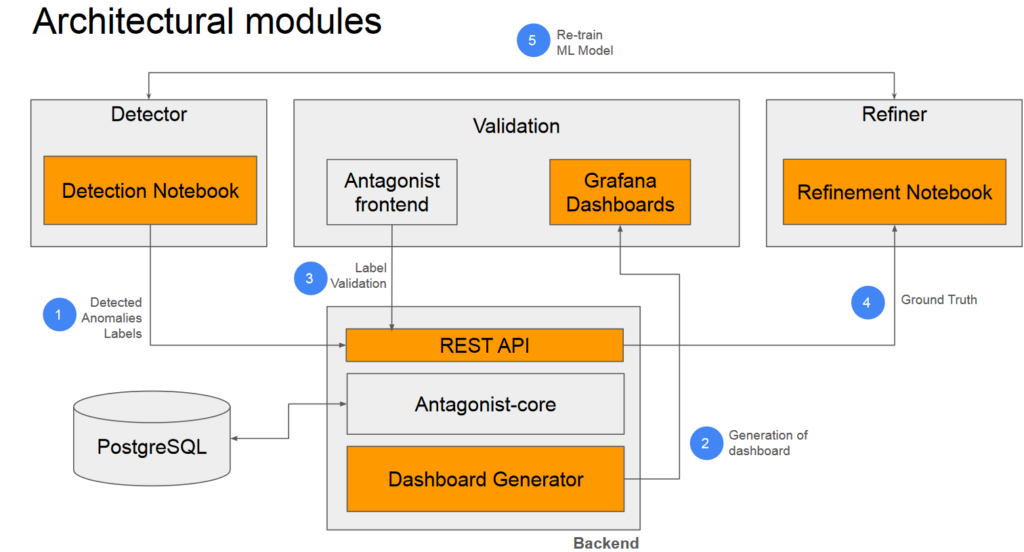

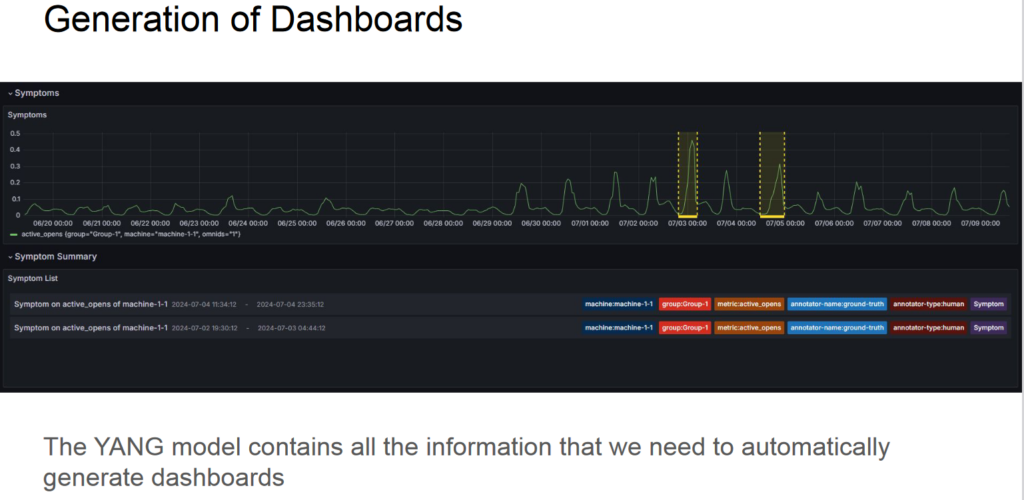

For this experiment, Vince Riccobene led the Antagonist (Anomaly TAGging ON hiStorical data) project, a label store for network anomaly detectors, … with the end goal of exchanging labeled anomaly detection data between groups: different teams within an operator, between operators, and with vendors.

When looking at the lifecycle of a network anomaly (detection, validation, refinement), this project will be of particular importance in the validation and refinement phases. In those phases, the networking industry needs to share its collective expertise… hence sharing the labeled data.
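The label lifecycle described above can be pictured with a small in-memory store. This is a hypothetical Python sketch, not the Antagonist data model or API: the `AnomalyLabel` fields, the `LabelStore` methods, and the rule that only confirmed labels are exported for sharing are all assumptions made for illustration.

```python
import json
from dataclasses import asdict, dataclass, field
from enum import Enum
from typing import Optional


class Stage(Enum):
    """Lifecycle stages of a label: detection, validation, refinement."""
    DETECTED = "detected"
    VALIDATED = "validated"
    REFINED = "refined"


@dataclass
class AnomalyLabel:
    label_id: int
    source: str                       # detector or team that raised the label
    target: str                       # e.g. a device or interface identifier
    start: str                        # ISO 8601 time window of the anomaly
    end: str
    stage: Stage = Stage.DETECTED
    confirmed: Optional[bool] = None  # None until someone validates it
    history: list[str] = field(default_factory=list)


class LabelStore:
    """Hypothetical in-memory label store; not the Antagonist API."""

    def __init__(self) -> None:
        self._labels: dict[int, AnomalyLabel] = {}
        self._next_id = 1

    def add(self, source: str, target: str, start: str, end: str) -> AnomalyLabel:
        """Detection: record a label produced by an anomaly detector."""
        label = AnomalyLabel(self._next_id, source, target, start, end)
        self._labels[label.label_id] = label
        self._next_id += 1
        return label

    def validate(self, label_id: int, confirmed: bool, who: str) -> None:
        """Validation: a person or team confirms or rejects the label."""
        label = self._labels[label_id]
        label.confirmed = confirmed
        label.stage = Stage.VALIDATED
        label.history.append(f"validated by {who}: {confirmed}")

    def refine(self, label_id: int, start: str, end: str, who: str) -> None:
        """Refinement: adjust the time window of an already validated label."""
        label = self._labels[label_id]
        if label.stage is Stage.DETECTED:
            raise ValueError("a label must be validated before it is refined")
        label.start, label.end = start, end
        label.stage = Stage.REFINED
        label.history.append(f"refined by {who}")

    def export(self) -> str:
        """Serialize confirmed labels to JSON so other groups can reuse them."""
        shared = []
        for label in self._labels.values():
            if label.confirmed:
                record = asdict(label)
                record["stage"] = label.stage.value
                shared.append(record)
        return json.dumps(shared)
```

Exporting only confirmed labels is one possible way to keep shared data trustworthy; the exchange format itself would have to be agreed on between the parties.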

Issues Related to Deployment/Usage of YANG Topology Modules (e.g., to Model a Digital Map)

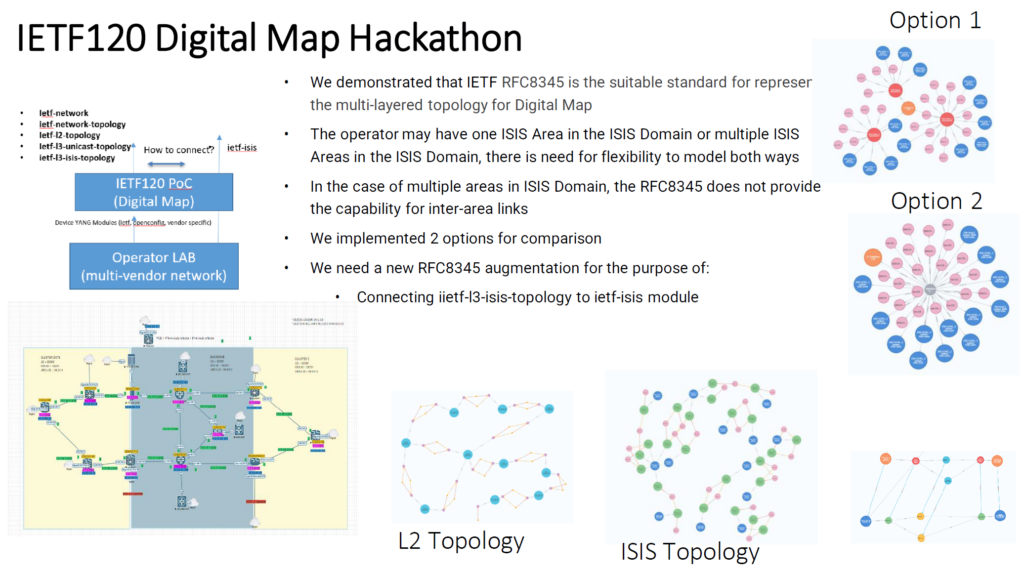

During the hackathon, we demonstrated, based on a multi-vendor test network, that the IETF RFC8345 topology YANG model is the right standard for representing the multi-layered topology of a Digital Map. Kudos to Olga Havel for leading the effort.

The team analyzed all RFC8345 augmented YANG modules and drew some useful conclusions for the NMOP WG and the potential RFC8345bis document.
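For readers less familiar with RFC8345, the sketch below illustrates how its multi-layer model works: each `network` can list the networks it is built on (`supporting-network`), and nodes and links point to the underlay entries that realize them (`supporting-node`, `supporting-link`). The JSON follows the RFC 7951 naming of the `ietf-network` and `ietf-network-topology` modules, but the two-layer topology (`physical`, `ip`) and the `underlay` helper are hypothetical, and termination points are omitted for brevity.

```python
from typing import Any

# Hypothetical two-layer instance data using RFC 8345 node names, JSON-encoded
# per RFC 7951 (nodes added by ietf-network-topology carry its module prefix).
TOPOLOGY: dict[str, Any] = {
    "ietf-network:networks": {
        "network": [
            {
                "network-id": "physical",
                "node": [{"node-id": "dev1"}, {"node-id": "dev2"}],
                "ietf-network-topology:link": [
                    {"link-id": "dev1-dev2",
                     "source": {"source-node": "dev1"},
                     "destination": {"dest-node": "dev2"}},
                ],
            },
            {
                "network-id": "ip",
                "supporting-network": [{"network-ref": "physical"}],
                "node": [
                    {"node-id": "r1",
                     "supporting-node": [{"network-ref": "physical", "node-ref": "dev1"}]},
                    {"node-id": "r2",
                     "supporting-node": [{"network-ref": "physical", "node-ref": "dev2"}]},
                ],
                "ietf-network-topology:link": [
                    {"link-id": "r1-r2",
                     "source": {"source-node": "r1"},
                     "destination": {"dest-node": "r2"},
                     "supporting-link": [{"network-ref": "physical", "link-ref": "dev1-dev2"}]},
                ],
            },
        ]
    }
}

# Per kind: (list name, key leaf, supporting list, reference leaf).
SCHEMA = {
    "node": ("node", "node-id", "supporting-node", "node-ref"),
    "link": ("ietf-network-topology:link", "link-id", "supporting-link", "link-ref"),
}


def index(doc: dict[str, Any]) -> dict[str, dict[str, Any]]:
    """Map each network-id to its network entry."""
    return {net["network-id"]: net for net in doc["ietf-network:networks"]["network"]}


def underlay(nets: dict[str, dict[str, Any]], network_id: str,
             item_id: str, kind: str) -> list[tuple[str, str]]:
    """Follow supporting-node or supporting-link references down every layer."""
    list_name, key, sup_list, ref_leaf = SCHEMA[kind]
    entry = next(e for e in nets[network_id].get(list_name, []) if e[key] == item_id)
    found = []
    for sup in entry.get(sup_list, []):
        lower = (sup["network-ref"], sup[ref_leaf])
        found.append(lower)
        found.extend(underlay(nets, lower[0], lower[1], kind))
    return found
```

For example, `underlay(index(TOPOLOGY), "ip", "r1-r2", "link")` returns `[("physical", "dev1-dev2")]`: the IP link is realized by the physical link below it, which is exactly the kind of cross-layer navigation a Digital Map needs.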

Final Observations

The NMOP experiments require development work, in open source at the hackathon but also by vendors, to validate the future RFCs. This is the way the IETF used to work, but we have been forgetting about it. Expressed differently, we chose to ignore it, publishing RFCs without any running code. As seen over the last couple of IETF meetings, there is hope, as code experiments now go hand in hand with the protocol specifications. For the pretty young NMOP WG (only two IETFs old), the high hackathon participation is refreshing.

Finally, let’s not lose sight of the ultimate goal behind all of these OPS experiments: the famous “closed loop” use cases, for which the above experiments (and some others) are requirements. If you assemble all the pieces of the puzzle the right way, it starts to become obvious.

While I only mentioned a few names in this post, many thanks to all the people involved. Those in the picture were present on site … while others were remote. Happy to be part of that (virtual) team.