To solve the next challenge of autonomous networks, it’s not sufficient to configure devices with YANG data models, and it’s not sufficient to stream telemetry: we must act on the issue and close the loop (hence the name “closed loop action”).

The networking industry spent about a decade standardizing network configuration with YANG data models, and then some more time improving model-driven telemetry to stream telemetry data, based on YANG Push or OpenConfig streaming telemetry. It’s now time to combine the two mechanisms, configuration and operational data, to realize the vision of autonomous networks.

The basic next step is to propose closed loop action(s) when customer services degrade or simply when anomalies are detected. The Service Assurance for Intent-based Networking Architecture (SAIN), presented at the IETF, will help in that regard: “it not only helps to correlate the service degradation with symptoms of a specific network component but also to list the services impacted by the failure or degradation of a specific network component.” The two SAIN drafts, the architecture and the related YANG module, are right now in IETF last call. So, without much surprise, the two documents will become RFCs soon.

As Lord Kelvin said: “if you cannot measure it, you cannot improve it”. So, in order to take an “educated” closed loop action, we obviously require (more) network visibility.

We can divide this network visibility into different categories:

- Data plane visibility: where is the traffic actually going? See our latest IETF hackathon work on SRv6 IPFIX Flow Monitoring.

- Control plane visibility: where is the traffic supposed to go? Typically BGP Monitoring Protocol (BMP).

- Management plane visibility: interface/QoS/environmental/you-name-it/… counters and some topology information, pushed with model-driven telemetry (read: YANG Push).

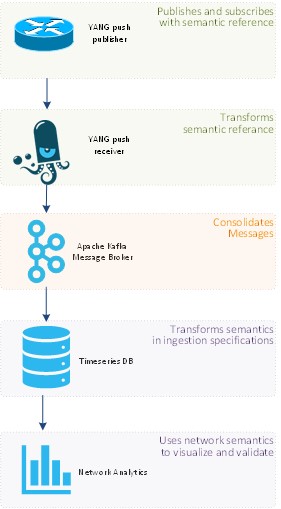

The first two (data plane and control plane visibility) are on the right track from a data pipeline point of view, so let’s focus this blog on management plane visibility, with YANG Push. Typically, we stream model-driven telemetry from routers to a YANG Push receiver. From there, the data is forwarded through a message broker (Apache Kafka being something of a de facto standard in most operator environments) to a time series database for storage, where analytics tools can query and make sense of all the data. In other words, extracting information from data.

What is the issue today? At the end of the data pipeline, where the data scientists are looking at the data, the data semantics are lost most of the time. Yes, they could ask the network architects some questions (assuming they know which questions to ask, which is already difficult), but the end goal is to rely on automation. We have spent a great deal of time specifying the semantics of YANG objects, with specific data types: how can we preserve these semantics along the data pipeline chain?

Let’s take the simple example of the ipv4-address, ipv4-prefix, ipv6-address, and ipv6-prefix data types, called typedefs in YANG (those specific typedefs are documented in “Common YANG Data Types”, RFC 6991). Knowing those clear semantics, as opposed to “stupid” strings, is key for data scientists who analyze the data. Without them, data scientists are left with some sort of clustering algorithm (which would, for example, group IP addresses along prefixes… a quite obvious concept for any networking engineer).
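To make the difference concrete, here is a minimal Python sketch of what knowing the type buys us. It assumes each telemetry value arrives tagged with its RFC 6991 typedef name; the sample values, the prefix list, and the `group_by_prefix` helper are hypothetical and only serve as an illustration. With the type known, grouping addresses by prefix is a direct lookup rather than a clustering exercise.

```python
import ipaddress
from collections import defaultdict

# Hypothetical telemetry samples: (YANG typedef name, value as received).
samples = [
    ("ipv4-address", "192.0.2.10"),
    ("ipv4-address", "192.0.2.200"),
    ("ipv4-address", "198.51.100.7"),
    ("ipv6-address", "2001:db8::1"),
]

# Hypothetical prefixes of interest (documentation ranges).
prefixes = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]


def group_by_prefix(samples, prefixes):
    """Group address values under the first prefix that contains them."""
    groups = defaultdict(list)
    for yang_type, value in samples:
        # Only values known to be addresses are parsed; others are skipped.
        if yang_type not in ("ipv4-address", "ipv6-address"):
            continue
        addr = ipaddress.ip_address(value)
        for net in prefixes:
            if addr.version == net.version and addr in net:
                groups[str(net)].append(value)
                break
    return dict(groups)


print(group_by_prefix(samples, prefixes))
```

Had the same values arrived as untyped strings, the consumer would first have to guess which strings are addresses at all before any of this could run.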

Bottom line: we must preserve the YANG object semantics throughout the telemetry journey, from the YANG modules inside routers up to the collector, then through Apache Kafka, followed by the time series database (TSDB), and finally within the network analytics tool.
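As a rough illustration of what “preserving the semantics” could mean for a single value, the Python sketch below carries the YANG typedef name alongside the value in a JSON message, so that a consumer at the far end of the pipeline can rebuild a typed object. This is only an assumption-laden sketch, not the mechanism used by the project: the message layout, the `encode`/`decode` helpers, the `DECODERS` table, and the example path are all hypothetical.

```python
import ipaddress
import json
from functools import partial

# Hypothetical mapping from RFC 6991 typedef names to Python decoders.
# strict=False accepts prefixes whose host bits are not all zero.
DECODERS = {
    "ipv4-address": ipaddress.IPv4Address,
    "ipv6-address": ipaddress.IPv6Address,
    "ipv4-prefix": partial(ipaddress.IPv4Network, strict=False),
    "ipv6-prefix": partial(ipaddress.IPv6Network, strict=False),
}


def encode(path, yang_type, value):
    """Producer side: ship the typedef name together with the value."""
    return json.dumps({"path": path, "yang_type": yang_type, "value": value})


def decode(message):
    """Consumer side: rebuild a typed value; unknown types stay strings."""
    record = json.loads(message)
    decoder = DECODERS.get(record["yang_type"], str)
    record["value"] = decoder(record["value"])
    return record


# Hypothetical path, used purely for illustration.
msg = encode("/example/route/prefix", "ipv4-prefix", "192.0.2.0/24")
rec = decode(msg)
print(type(rec["value"]).__name__, rec["value"].prefixlen)
```

The point of the sketch is simply that the type information has to travel with the data; if any hop drops it, every later stage is back to guessing.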

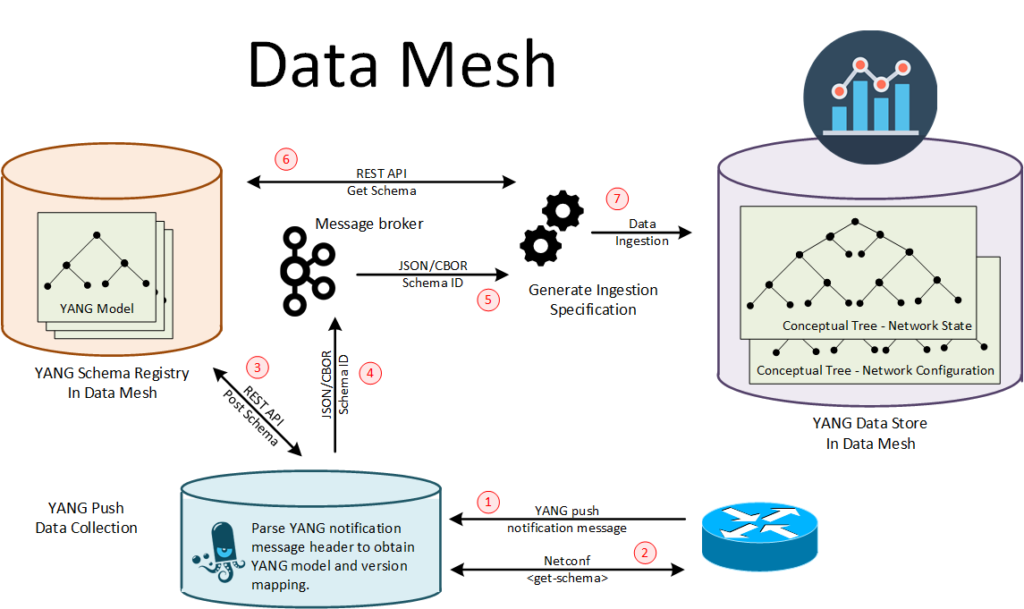

I’m happy to share this new “Network Analytics” project, initiated by Thomas Graf from Swisscom, around a group of passionate engineers combining operators, vendors, Confluent/Kafka, time series DB, and analytics. This project introduces a new architecture, based on YANG Push, which will enable automated data processing and data mesh integration, while preserving the data semantics along the path. Naturally, this functionality will facilitate the easy onboarding of YANG-managed devices.

In big letters in the picture above, you can read “Data Mesh”, whose principles guide this work.

We started our effort at IETF 115 with drafts and a hackathon, and we already have plans for the IETF 116 and IETF 117 hackathons. Thomas Graf’s recent post, Network Analytics @ IETF 115, nicely highlights some of the achievements and complements this blog with more information and pointers to the related IETF drafts.

How to kick off this project? Well, with a “YANG + Kafka” special cake…

This is the beginning of a longer and well-planned journey…

Finally, you might enjoy this recorded live panel on “Telco Networks and Data Mesh becoming one”, with Thomas Graf, Kai Waehner, Eric Tschetter, Paolo Lucente, and Pierre Francois. As Thomas puts it:

we bring YANG, the data modelling language for networks into Apache Kafka, the Data Mesh de-facto standard … Enabling network operators to onboard data in minutes and network/analytic vendors to integrate existing Big Data environments. One step closer to Closed Loop operated networks.